A Well-Oiled Machine - An Exploration of Concurrent Programming in Java

We see concurrency every day on computer systems and often take it for granted that they can do more than one thing at a time. You can have a web browser open while working in a word processor, while other programs download files, play music and more, all simultaneously. Even individual programs have to perform several functions at once. For instance, your web browser must retrieve information from other parts of the web while also updating your display, responding to keystrokes and mouse clicks, and handling network requests and file access. This is all made possible through concurrent programming.

Processes and Threads

There are two basic units of execution in concurrent programming: processes and threads. In Java, we are mostly interested in threads, but processes are still important.

Processes

Threads

Concurrency in Java

Creating and Running a Thread

There are two main ways to create a thread in Java.

• Provide a Runnable object. The Runnable interface defines a single method - run - that contains the code executed in the thread. The Runnable object is then passed to the Thread constructor.

• Provide a Thread subclass. The Thread class itself implements Runnable, but its run method does nothing by default, so the subclass overrides run with its own implementation.

Each approach has its benefits. On one hand, the first approach is more general, as the class that implements Runnable is free to extend a class other than Thread. On the other hand, the second approach is easier to use in simple applications, but the task class must be a descendant of Thread.
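The two approaches can be sketched side by side as follows. This is a minimal illustration rather than a definitive implementation: the names HelloRunnable, HelloThread and ThreadCreationDemo are hypothetical, and the shared log list is only there so the result can be inspected.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

// Approach 1: the task implements Runnable (hypothetical class name).
class HelloRunnable implements Runnable {
    private final List<String> log;

    HelloRunnable(List<String> log) {
        this.log = log;
    }

    @Override
    public void run() {
        log.add("hello from a Runnable");
    }
}

// Approach 2: the task extends Thread and overrides run (hypothetical class name).
class HelloThread extends Thread {
    private final List<String> log;

    HelloThread(List<String> log) {
        this.log = log;
    }

    @Override
    public void run() {
        log.add("hello from a Thread subclass");
    }
}

class ThreadCreationDemo {
    // Starts one thread per approach and waits for both to finish.
    static List<String> runBoth() throws InterruptedException {
        List<String> log = new CopyOnWriteArrayList<>(); // thread-safe list
        Thread first = new Thread(new HelloRunnable(log)); // Runnable passed to the Thread constructor
        Thread second = new HelloThread(log);              // Thread subclass
        first.start();  // start() runs run() on a new thread; calling run() directly would not
        second.start();
        first.join();
        second.join();
        return log;
    }

    public static void main(String[] args) throws InterruptedException {
        // The order of the two messages is not guaranteed.
        runBoth().forEach(System.out::println);
    }
}
```

Note that both threads are launched with start(), not run(), and that the order in which the two messages are logged can differ between runs.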

Important Note: if a Thread subclass doesn't override the run() method, no error is reported. Instead, the run() method inherited from the Thread class is executed, and because that implementation does nothing when no Runnable was supplied, the thread produces no output.

Thread States

A thread can exist at any point in time in one of the following states:

- New - A thread that has been created but not yet started is in this state. None of its code has been executed.

- Runnable - When a thread is ready to run, it is moved to this state. It may be either actually running or just ready to run at any moment and it is the thread scheduler's job to give the thread time to run.

- Blocked - A thread is temporarily inactive in this state while the object it wants to use is locked by another thread. When the resource becomes available (unlocked), the thread moves back to the runnable state.

- Waiting - A thread is temporarily inactive in this state while it waits for a signal from another thread before proceeding. Once the signal is received, it moves back to the runnable state.

- Timed Waiting - If the thread calls a method with a time-out parameter, it will be placed in this state until the timeout expires or a notification is received, at which point it moves back to the runnable state.

- Terminated - If the thread exits normally (all the code has been executed) or there is an error, the thread will terminate.

(Image of a thread lifecycle, from "Lifecycle and States of a Thread in Java", GeeksforGeeks)
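Some of these states can be observed from code through Thread.getState(). The sketch below is only an illustration: the class name ThreadStateDemo is hypothetical, and a CountDownLatch stands in for "a signal from another thread". It captures the New, Waiting and Terminated states; the other states depend on scheduling and are harder to observe reliably.

```java
import java.util.concurrent.CountDownLatch;

class ThreadStateDemo {
    // Returns the states seen before start, while parked, and after completion.
    static Thread.State[] observe() throws InterruptedException {
        CountDownLatch signal = new CountDownLatch(1);
        Thread worker = new Thread(() -> {
            try {
                signal.await(); // waits until another thread calls countDown()
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        Thread.State created = worker.getState(); // not started yet

        worker.start();
        Thread.State parked;
        do {
            Thread.sleep(1); // give the worker time to reach await()
            parked = worker.getState();
        } while (parked != Thread.State.WAITING);

        signal.countDown(); // send the signal the worker is waiting for
        worker.join();      // wait for the worker to finish
        Thread.State finished = worker.getState();

        return new Thread.State[] { created, parked, finished };
    }

    public static void main(String[] args) throws InterruptedException {
        for (Thread.State state : observe()) {
            System.out.println(state);
        }
    }
}
```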

Daemon Threads

Java offers two different types of threads: user threads and daemon threads. User threads are high-priority threads, and the JVM waits for them to complete their tasks before terminating. In contrast, daemon threads are low-priority threads designed only to provide services to user threads, and they do not prevent the JVM from exiting once all user threads have completed their tasks. This means that infinite loops (which are typical in daemon threads) won't cause problems, because the daemon thread's code simply stops being executed once all user threads have terminated.

Daemon threads are useful for background supporting tasks such as releasing memory of unused objects and removing unwanted entries from the cache.

Creating a Daemon Thread

To make a thread a daemon thread in Java, all you need to do is call setDaemon(true) on it before it is started, as seen below:

NewThread daemonThread = new NewThread();

daemonThread.setDaemon(true);

daemonThread.start();
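A slightly fuller sketch of the same idea is shown below, using a plain Thread with a lambda instead of the NewThread class above. The names DaemonDemo and startHeartbeat are hypothetical. The call to setDaemon has to come before start(); calling it on a thread that is already running throws an IllegalThreadStateException.

```java
class DaemonDemo {
    // Starts a background thread that loops forever but never blocks JVM exit.
    static Thread startHeartbeat() {
        Thread heartbeat = new Thread(() -> {
            while (true) {
                try {
                    Thread.sleep(100); // stand-in for periodic background work
                } catch (InterruptedException e) {
                    return; // stop if interrupted
                }
            }
        });
        heartbeat.setDaemon(true); // must be called before start()
        heartbeat.start();
        return heartbeat;
    }

    public static void main(String[] args) {
        Thread heartbeat = startHeartbeat();
        System.out.println("daemon? " + heartbeat.isDaemon());
        // main returns here; the JVM exits even though the loop above never ends
    }
}
```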

Java Memory Model

The Java Memory Model (JMM) specifies how the JVM works with the computer's Random Access Memory (RAM). It specifies how and when different threads can see values written to shared variables by other threads and how to synchronise access when it is needed.

(Image of the JMM, from "Java Memory Model", jenkov.com)

Each thread has its own thread stack containing information about the methods the thread has called to reach the current point (known as the call stack). As the thread executes, the call stack changes. The thread stack also contains any local variables for each method being executed and a thread can only access its own thread stack. Even if two threads are executing identical code, the two threads will create the local variables of that code in their own stack.

The heap contains all objects created in the application, regardless of which thread created them. An object on the heap can be accessed by every thread that has a reference to it. When a thread has access to an object, it can also access that object's member variables. If two threads call a method on the same object at the same time, both have access to the object's member variables, but each thread has its own copy of the local variables.

A computer also contains a main memory area (RAM) which all CPUs can access. This is typically much bigger than the cache memories of the CPUs. When the CPU needs to write a result back to the main memory, it will flush it from its internal register to the cache, which in turn at some point will flush the value back to the main memory. This architecture is illustrated below:

(Image of computer architecture, from "Java Memory Model", jenkov.com)

The hardware memory architecture doesn't distinguish between thread stacks and heap. On the hardware, both are stored in the main memory.

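For other threads to reliably see updates to a shared variable, the update has to be made visible to them. One way to do this is to declare the variable volatile (synchronisation is another). A volatile declaration makes sure that the variable is read directly from main memory and is written back to main memory when updated.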
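A common use of volatile is a stop flag shared between two threads. The following is a minimal sketch with hypothetical names (VolatileFlagDemo, running, stopsAfterFlagCleared). Without the volatile keyword on the flag, the worker would not be guaranteed to see the update made by the main thread and could in principle loop forever.

```java
class VolatileFlagDemo {
    // volatile guarantees that a write by one thread is visible to reads by others
    private volatile boolean running = true;

    // Returns true if the worker noticed the cleared flag and terminated.
    static boolean stopsAfterFlagCleared() throws InterruptedException {
        VolatileFlagDemo demo = new VolatileFlagDemo();
        Thread worker = new Thread(() -> {
            while (demo.running) {
                // busy-wait until another thread clears the flag
            }
        });
        worker.start();

        Thread.sleep(50);     // let the worker spin for a moment
        demo.running = false; // this write becomes visible to the worker
        worker.join(2000);    // wait up to two seconds for it to stop
        return !worker.isAlive();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("worker stopped: " + stopsAfterFlagCleared());
    }
}
```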

Waiting Scenarios

If a process requests a resource that is not presently available, the process waits for it. Sometimes, it never succeeds in getting access to the resource. This can lead to one of three main scenarios:

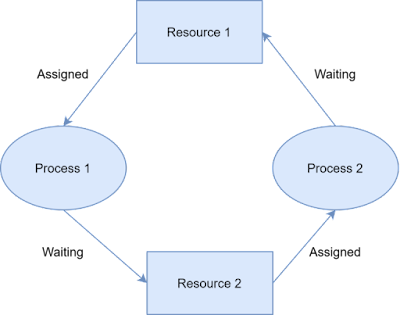

- Deadlock

A deadlock is a situation where processes block each other and none of the processes can make any progress as they wait for the resource held by the other process.

(An illustration of deadlock, from "Deadlock, Livelock and Starvation", Baeldung on Computer Science)

For a scenario to be characterised as a deadlock, it must meet the following four conditions:

- Mutual Exclusion - At least one resource must be held by a process in a non-sharable mode. Any other process requesting that resource needs to wait.

- Hold and Wait - A process must hold at least one resource while requesting additional resources held by other processes.

- No Pre-emption - A resource can't be released by force from a process.

- Circular Wait - A set of processes exists such that the first process is waiting for a resource held by the second, which is waiting for a resource held by the third, and so on, with the final process waiting for a resource held by the first process.
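The four conditions can be reproduced with two threads and two locks. The sketch below is an illustration with hypothetical names (CircularWaitDemo, holdThenTry), and it uses threads rather than processes. Each thread holds one lock and then asks for the other, which creates a circular wait. To keep the example from hanging, the second lock is requested with tryLock and a timeout, an assumption made purely so the program terminates; with a plain lock() call both threads would wait forever.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

class CircularWaitDemo {
    // Returns how many threads failed to obtain their second lock.
    static int failedAcquisitions() throws InterruptedException {
        Lock lockA = new ReentrantLock();
        Lock lockB = new ReentrantLock();
        CountDownLatch bothHoldFirst = new CountDownLatch(2);
        CountDownLatch bothTriedSecond = new CountDownLatch(2);
        AtomicInteger failures = new AtomicInteger();

        // The threads take the locks in opposite order: A then B, and B then A.
        Thread t1 = new Thread(() ->
                holdThenTry(lockA, lockB, bothHoldFirst, bothTriedSecond, failures));
        Thread t2 = new Thread(() ->
                holdThenTry(lockB, lockA, bothHoldFirst, bothTriedSecond, failures));
        t1.start();
        t2.start();
        t1.join();
        t2.join();
        return failures.get();
    }

    private static void holdThenTry(Lock first, Lock second,
                                    CountDownLatch bothHoldFirst,
                                    CountDownLatch bothTriedSecond,
                                    AtomicInteger failures) {
        first.lock(); // mutual exclusion: only this thread holds `first`
        try {
            bothHoldFirst.countDown();
            bothHoldFirst.await(); // now each thread holds exactly one lock

            // Hold and wait: ask for the lock that the other thread is holding.
            boolean acquired = second.tryLock(100, TimeUnit.MILLISECONDS);
            if (acquired) {
                second.unlock();
            } else {
                failures.incrementAndGet();
            }

            // Keep holding `first` until both threads have finished trying.
            bothTriedSecond.countDown();
            bothTriedSecond.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            first.unlock();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("threads that could not proceed: " + failedAcquisitions());
    }
}
```

Acquiring the locks in the same order in both threads would remove the circular wait, which is one common way to avoid this kind of deadlock.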

- Livelock

A livelock is a situation where the states of the processes constantly change but still depend on each other, meaning that the processes can never finish their tasks.

(An illustration of livelock, from "Deadlock, Livelock and Starvation", Baeldung on Computer Science)

In the illustration above, both Process1 and Process2 need the same resource. Each checks whether the other is in an active state and, if so, passes the resource to the other process. However, as both are active, they keep handing the resource over to each other in perpetuity.

It's like when two people phone each other at the same moment and each finds the line busy. They both hang up and try again, once again at the same time, which gets them into the same situation!

- Starvation

Starvation is the outcome when a process can't gain regular access to the shared resources it requires and so is unable to make any progress. This can occur as a result of livelock or deadlock, or in another scenario entirely in which a process is continuously denied access to a resource.

Atomic Operation

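Atomic operations are program operations that run completely independently of any other process: they complete as a single, indivisible step that no other thread can observe half-finished. This works by exploiting low-level atomic machine instructions such as compare-and-swap (CAS). A CAS operation works on three operands: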

- The memory location being operated on (M).

- The existing expected value (A) of the variable.

- The new value (B) which needs to be set.

The CAS operation atomically updates the value in M to B, but only if the existing value in M matches A; otherwise nothing is changed. When multiple threads attempt to update the same value through CAS, one wins and updates the value. However, unlike with locks, no thread gets suspended; the other threads are simply informed that they did not manage to update the value.

In Java, the most commonly used atomic variable classes are AtomicInteger, AtomicLong, AtomicBoolean and AtomicReference, which represent an int, a long, a boolean and an object reference respectively, each of which can be updated atomically.
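To make the three operands concrete, here is a minimal sketch of a hand-written CAS retry loop on an AtomicInteger. The names CasCounterDemo, increment and countWithThreads are hypothetical. In practice AtomicInteger.incrementAndGet() already does the equivalent; the loop is spelled out only to show how a thread that loses the race simply tries again instead of being suspended.

```java
import java.util.concurrent.atomic.AtomicInteger;

class CasCounterDemo {
    // Increments the counter with an explicit compare-and-set retry loop.
    static int increment(AtomicInteger counter) { // counter plays the role of M
        while (true) {
            int expected = counter.get();  // A: the value we believe is there
            int updated = expected + 1;    // B: the value we want to store
            if (counter.compareAndSet(expected, updated)) {
                return updated;            // our update won
            }
            // another thread changed the value first; read again and retry
        }
    }

    // Runs several threads that all increment the same counter.
    static int countWithThreads(int threads, int incrementsPerThread)
            throws InterruptedException {
        AtomicInteger counter = new AtomicInteger();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < incrementsPerThread; j++) {
                    increment(counter);
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            worker.join();
        }
        return counter.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(countWithThreads(4, 10_000));
    }
}
```

Because every successful compareAndSet adds exactly one, no increments are lost even though no lock is used.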

The Executor Framework

Java's Executor framework provides thread pool facilities to Java applications. A thread pool is a pool of already created worker threads ready to work. The framework contains the Executor interface, with its single method execute(Runnable task), and ExecutorService, which extends Executor to add thread pool management facilities such as shutting down the thread pool, as well as various life-cycle facilities.
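As a rough sketch of how these pieces fit together, the example below creates a fixed-size pool with Executors.newFixedThreadPool, hands it work through both execute and submit, and then shuts it down. The class and method names (ExecutorDemo, runWithExecute, sumOfSquares) and the pool size of 4 are arbitrary choices made for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class ExecutorDemo {
    // Fire-and-forget tasks through Executor.execute(Runnable).
    static int runWithExecute(int tasks) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        AtomicInteger completed = new AtomicInteger();
        for (int i = 0; i < tasks; i++) {
            pool.execute(completed::incrementAndGet);
        }
        pool.shutdown();                            // accept no new tasks
        pool.awaitTermination(5, TimeUnit.SECONDS); // wait for queued tasks to finish
        return completed.get();
    }

    // Tasks that return a result through ExecutorService.submit(Callable).
    static int sumOfSquares(int n) throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        try {
            List<Future<Integer>> results = new ArrayList<>();
            for (int i = 1; i <= n; i++) {
                final int value = i;
                results.add(pool.submit(() -> value * value));
            }
            int sum = 0;
            for (Future<Integer> result : results) {
                sum += result.get(); // blocks until that task has finished
            }
            return sum;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(runWithExecute(8));
        System.out.println(sumOfSquares(10));
    }
}
```

Forgetting to call shutdown() is a common mistake: the pool's worker threads are user threads by default, so they keep the JVM alive.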

Concurrent Collection Classes

The java.util.concurrent package includes a number of additions to the Java Collections Framework to help avoid memory consistency errors (when different threads have inconsistent views of the same data). These include:

- BlockingQueue - a first-in-first-out data structure that blocks or times out when you attempt to add to a full queue or retrieve from an empty queue.

- ConcurrentMap - a subinterface of java.util.Map that defines several useful atomic operations, such as putIfAbsent.

- ConcurrentNavigableMap - a subinterface of ConcurrentMap that supports approximate matches.
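The three interfaces above might be used as in the following sketch. It picks ArrayBlockingQueue, ConcurrentHashMap and ConcurrentSkipListMap as implementations, which is an assumption made for illustration, and the class and method names are hypothetical.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.ConcurrentNavigableMap;
import java.util.concurrent.ConcurrentSkipListMap;

class ConcurrentCollectionsDemo {
    // BlockingQueue: a producer thread hands numbers to the calling thread.
    static int produceAndConsume(int items) throws InterruptedException {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(2); // capacity 2
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= items; i++) {
                    queue.put(i); // blocks while the queue is full
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        int sum = 0;
        for (int i = 0; i < items; i++) {
            sum += queue.take(); // blocks while the queue is empty
        }
        producer.join();
        return sum;
    }

    // ConcurrentMap: several threads count hits for the same key atomically.
    static int countHits(int threads, int hitsPerThread) throws InterruptedException {
        ConcurrentMap<String, Integer> hits = new ConcurrentHashMap<>();
        hits.putIfAbsent("page", 0); // stores 0 only if the key is not yet mapped
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < hitsPerThread; j++) {
                    hits.merge("page", 1, Integer::sum); // atomic read-modify-write
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            worker.join();
        }
        return hits.get("page");
    }

    // ConcurrentNavigableMap: approximate match, the greatest key <= the given key.
    static Integer closestKeyAtOrBelow(int key) {
        ConcurrentNavigableMap<Integer, String> map = new ConcurrentSkipListMap<>();
        map.put(10, "ten");
        map.put(20, "twenty");
        map.put(30, "thirty");
        return map.floorKey(key);
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(produceAndConsume(5));
        System.out.println(countHits(4, 1_000));
        System.out.println(closestKeyAtOrBelow(25));
    }
}
```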
